| Category | Sub-category | Variable | Dim. | Type | Range | Description |

|---|---|---|---|---|---|---|

| Global | Scene | scene |

(1,) | D | 6 types | Scene name/identifier |

| Global | Scene | gravity |

(1,) | C | - | Acceleration of gravity |

| Global | Object | render_asset |

(1,) | D | 90 types | Specifies visual appearance |

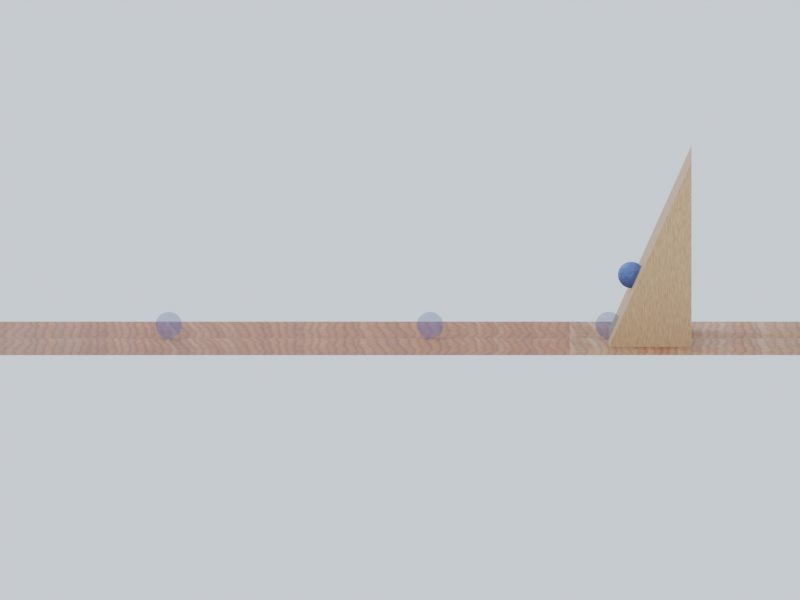

| Dynamic | Object | position |

(T,3) | C | - | 3D coordinates across time |

| Dynamic | Object | rotation |

(T,3) | C | - | Euler angles across time |

CausalVerse

Benchmarking Causal Representation Learning with Configurable High-Fidelity Simulations